[PyTorch] Supervised Contrastive Learning 🔥

Original Notebook : https://www.kaggle.com/code/debarshichanda/pytorch-supervised-contrastive-learning

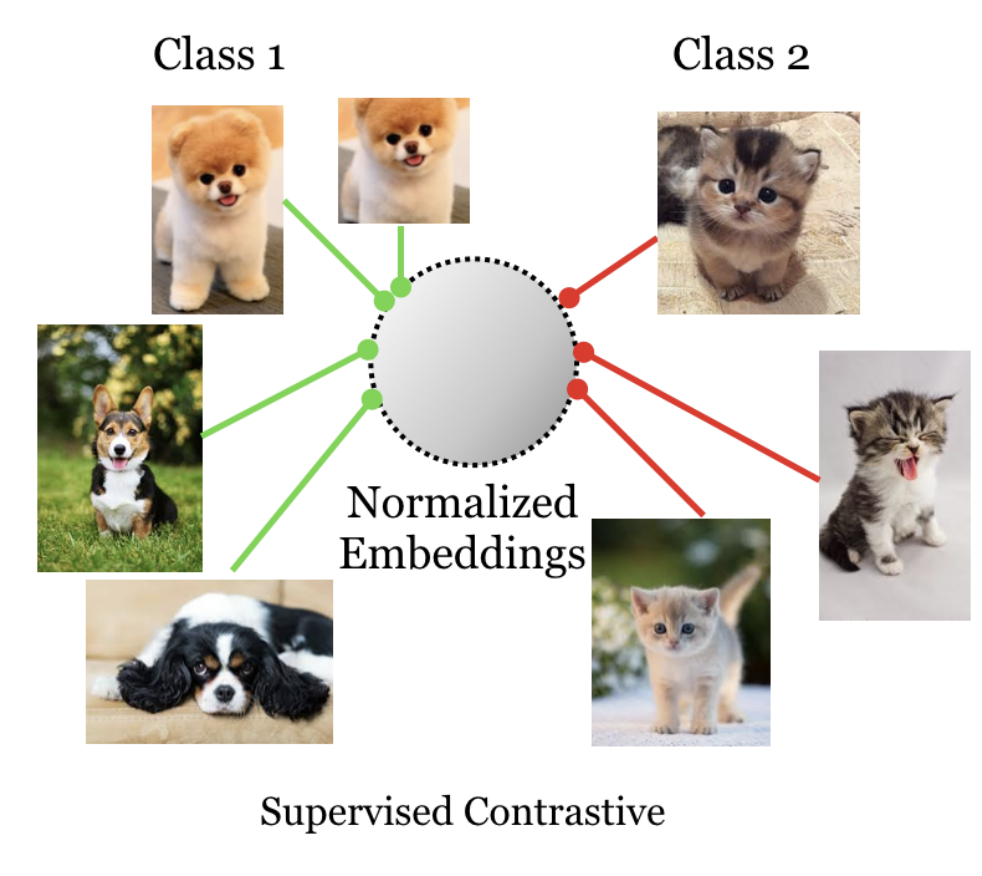

SUPERVISED CONTRASTIVE LEARNING

Contrastive learning applied to self-supervised representation learning has seen a resurgence in recent years, leading to state-of-the-art performance in the unsupervised training of deep image models. Modern batch contrastive approaches subsume or significantly outperform traditional contrastive losses such as triplet, max-margin and the N-pairs loss. In this work, we extend the self-supervised batch contrastive approach to the fully-supervised setting, allowing us to effectively leverage label information. Clusters of points belonging to the same class are pulled together in embedding space, while clusters of samples from different classes are simultaneously pushed apart. We analyze two possible versions of the supervised contrastive (SupCon) loss, identifying the best-performing formulation of the loss. On ResNet-200, we achieve top-1 accuracy of 81.4% on the ImageNet dataset, which is 0.8% above the best number reported for this architecture. We show consistent outperformance over cross-entropy on other datasets and two ResNet variants. The loss shows benefits for robustness to natural corruptions and is more stable to hyperparameter settings such as optimizers and data augmentations.

Supervised Contrastive Learning : https://arxiv.org/abs/2004.11362
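The loss described above can be written down in a few lines. For each anchor i, take every other sample in the batch as the contrast set A(i), take the other samples with the same label as its positives P(i), and average the log-softmax of z_i·z_p/τ over the positives; the paper calls this the L_out form and reports it as the better of its two variants. The NumPy sketch below is a standalone illustration, not the code this notebook trains with (the notebook imports `losses` from `pytorch-metric-learning`, presumably for its `SupConLoss`, whose reduction details may differ). The function name `supcon_loss` and the choice to skip anchors without a positive are assumptions.

```python
import numpy as np


def supcon_loss(embeddings, labels, temperature=0.1):
    """Sketch of the supervised contrastive loss (the paper's L_out form).

    Each anchor i is compared against every other sample in the batch, A(i);
    its positives P(i) are the other samples that share its label.
    Anchors with no positive in the batch are skipped.
    """
    z = np.asarray(embeddings, dtype=float)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)  # project onto the unit sphere
    labels = np.asarray(labels)
    n = z.shape[0]

    self_mask = np.eye(n, dtype=bool)
    logits = (z @ z.T) / temperature
    logits = np.where(self_mask, -np.inf, logits)  # an anchor is never its own contrast

    # log of the softmax over A(i), computed stably row by row
    row_max = logits.max(axis=1, keepdims=True)
    log_denom = row_max + np.log(np.exp(logits - row_max).sum(axis=1, keepdims=True))
    log_prob = logits - log_denom

    pos_mask = (labels[:, None] == labels[None, :]) & ~self_mask
    n_pos = pos_mask.sum(axis=1)
    has_pos = n_pos > 0

    # average log-probability over each anchor's positives, then over anchors
    sum_log_prob_pos = np.where(pos_mask, log_prob, 0.0).sum(axis=1)
    return float(-(sum_log_prob_pos[has_pos] / n_pos[has_pos]).mean())


emb = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
print(supcon_loss(emb, [0, 0, 1, 1]))  # labels agree with the geometry
print(supcon_loss(emb, [0, 1, 0, 1]))  # labels disagree: larger loss
```

Lowering `temperature` sharpens the softmax, so hard negatives (similar embeddings with a different label) dominate the gradient more strongly; `CFG.temperature = 0.1` below sets this knob for the notebook's loss.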

Install Libraries

!pip install -q timm pytorch-metric-learning

Import Packages

import os
import cv2
import copy
import time
import random
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
import torch
import torch.nn as nn
import torch.nn.functional as F
import torch.optim as optim
from torch.optim import lr_scheduler
from torch.utils.data import DataLoader, Dataset
from torch.cuda import amp
from sklearn.preprocessing import LabelEncoder
from sklearn.model_selection import train_test_split, StratifiedKFold, GroupKFold
from sklearn.metrics import roc_auc_score, f1_score
from tqdm.notebook import tqdm
from collections import defaultdict
import albumentations as A
from albumentations.pytorch import ToTensorV2
import timm
from pytorch_metric_learning import losses

Training Configuration

class CFG:
    seed = 42
    model_name = "tf_efficientnet_b4_ns"
    img_size = 512
    scheduler = "CosineAnnealingLR"
    T_max = 10
    lr = 1e-5
    min_lr = 1e-6
    batch_size = 16
    weight_decay = 1e-6
    num_epochs = 10
    num_classes = 11014
    embedding_size = 512
    n_fold = 5
    n_accumulate = 4
    temperature = 0.1
    device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

TRAIN_DIR = "./data/train_images"
TEST_DIR = "./data/test_images"
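With `scheduler = "CosineAnnealingLR"`, `T_max = 10`, `lr = 1e-5` and `min_lr = 1e-6`, the learning rate should follow the closed-form cosine curve documented for `torch.optim.lr_scheduler.CosineAnnealingLR`, lr_t = min_lr + (lr - min_lr) * (1 + cos(pi * t / T_max)) / 2, where t counts `scheduler.step()` calls. The sketch below tabulates that curve assuming one scheduler step per epoch (which `T_max = num_epochs = 10` suggests) and that `min_lr` is passed as `eta_min`; both are assumptions, since the scheduler is not constructed in this section. It also computes the effective batch size, `batch_size * n_accumulate` images per optimizer step.

```python
import math

# Values copied from CFG above
lr, min_lr, T_max, num_epochs = 1e-5, 1e-6, 10, 10
batch_size, n_accumulate = 16, 4


def cosine_lr(t, eta_max=lr, eta_min=min_lr, t_max=T_max):
    """Closed-form cosine-annealed learning rate after t scheduler steps."""
    return eta_min + (eta_max - eta_min) * (1 + math.cos(math.pi * t / t_max)) / 2


effective_batch = batch_size * n_accumulate  # images behind one optimizer step
schedule = [cosine_lr(t) for t in range(num_epochs)]  # lr used in epochs 1..10

print(effective_batch)
print([f"{x:.2e}" for x in schedule])
```

If `scheduler.step()` were instead called after every optimizer step, t would reach `T_max` after only 10 updates and the cosine curve would start rising again, which is rarely what a 10-epoch schedule intends.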

Set Seed for Reproducibility

def set_seed(seed=42):
    """
    Sets the seed of the entire notebook so results are the same every time we run.
    This is for REPRODUCIBILITY.
    """
    np.random.seed(seed)
    random.seed(seed)
    torch.manual_seed(seed)
    torch.cuda.manual_seed(seed)
    # When running on the cuDNN backend, two further options must be set:
    # deterministic algorithms on, and the autotuner (benchmark mode) off,
    # because benchmark mode may select different kernels from run to run.
    torch.backends.cudnn.deterministic = True
    torch.backends.cudnn.benchmark = False
    # Set a fixed value for the hash seed
    # (only affects Python processes started after this point)
    os.environ['PYTHONHASHSEED'] = str(seed)

set_seed(CFG.seed)

df_train = pd.read_csv("./data/train.csv")

df_train['file_path'] = df_train.image.apply(lambda x: os.path.join(TRAIN_DIR, x))

df_train.head(5)

#> posting_id ... file_path

#> 0 train_129225211 ... ./data/train_images/0000a68812bc7e98c42888dfb1...

#> 1 train_3386243561 ... ./data/train_images/00039780dfc94d01db8676fe78...

#> 2 train_2288590299 ... ./data/train_images/000a190fdd715a2a36faed16e2...

#> 3 train_2406599165 ... ./data/train_images/00117e4fc239b1b641ff08340b...

#> 4 train_3369186413 ... ./data/train_images/00136d1cf4edede0203f32f05f...

#>

#> [5 rows x 6 columns]

le = LabelEncoder()

df_train.label_group = le.fit_transform(df_train.label_group)

df_train

| posting_id | image | image_phash | title | label_group | file_path |
|---|---|---|---|---|---|
| train_129225211 | 0000a68812bc7e98c42888dfb1c07da0.jpg | 94974f937d4c2433 | Paper Bag Victoria Secret | 666 | ./data/train_images/0000a68812bc7e98c42888dfb1c07da0.jpg |
| train_3386243561 | 00039780dfc94d01db8676fe789ecd05.jpg | af3f9460c2838f0f | Double Tape 3M VHB 12 mm x 4,5 m ORIGINAL / DOUBLE FOAM TAPE | 7572 | ./data/train_images/00039780dfc94d01db8676fe789ecd05.jpg |
| train_2288590299 | 000a190fdd715a2a36faed16e2c65df7.jpg | b94cb00ed3e50f78 | Maling TTS Canned Pork Luncheon Meat 397 gr | 6172 | ./data/train_images/000a190fdd715a2a36faed16e2c65df7.jpg |
| train_2406599165 | 00117e4fc239b1b641ff08340b429633.jpg | 8514fc58eafea283 | Daster Batik Lengan pendek - Motif Acak / Campur - Leher Kancing (DPT001-00) Batik karakter Alhadi | 10509 | ./data/train_images/00117e4fc239b1b641ff08340b429633.jpg |
| train_3369186413 | 00136d1cf4edede0203f32f05f660588.jpg | a6f319f924ad708c | Nescafe \xc3\x89clair Latte 220ml | 9425 | ./data/train_images/00136d1cf4edede0203f32f05f660588.jpg |
| train_2464356923 | 0013e7355ffc5ff8fb1ccad3e42d92fe.jpg | bbd097a7870f4a50 | CELANA WANITA (BB 45-84 KG)Harem wanita (bisa cod) | 6836 | ./data/train_images/0013e7355ffc5ff8fb1ccad3e42d92fe.jpg |
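`LabelEncoder.fit_transform` replaces each raw `label_group` id with its position among the sorted distinct ids, which is why the encoded values in the table (for example 666 and 7572) are small integers. The codes run from 0 to (number of distinct groups) - 1, which is presumably what `CFG.num_classes = 11014` records. A dependency-free sketch of the same mapping, using a hypothetical helper `encode_labels` and toy values:

```python
def encode_labels(values):
    """Map each distinct value to 0..n_classes-1 in sorted order,
    mirroring LabelEncoder.fit_transform for sortable labels."""
    classes = sorted(set(values))
    index = {value: code for code, value in enumerate(classes)}
    return [index[value] for value in values], classes


codes, classes = encode_labels([30, 10, 30, 20])
print(codes)    # [2, 0, 2, 1]
print(classes)  # [10, 20, 30]
```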

Dataset Class

class ShopeeDataset(Dataset):
    def __init__(self, root_dir, df, transforms=None):
        self.root_dir = root_dir
        self.df = df
        self.transforms = transforms

    def __len__(self):
        return len(self.df)

    def __getitem__(self, index):
        # Look columns up by name: with the six columns shown above, iloc[index, -3]
        # is `title`, not `label_group`, and positional indices shift whenever a
        # column such as `fold` is appended.
        img_path = self.df["file_path"].iloc[index]
        img = cv2.imread(img_path)
        img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
        label = self.df["label_group"].iloc[index]
        if self.transforms:
            img = self.transforms(image=img)["image"]
        return img, label

Augmentations & Transforms

data_transforms = {
    "train": A.Compose([
        A.Resize(CFG.img_size, CFG.img_size),
        A.HorizontalFlip(p=0.5),
        A.RandomBrightnessContrast(brightness_limit=(-0.1, 0.1),
                                   contrast_limit=(-0.1, 0.1),
                                   p=0.5),
        A.Normalize(mean=[0.485, 0.456, 0.406],
                    std=[0.229, 0.224, 0.225],
                    max_pixel_value=255.0,
                    p=1.0),
        ToTensorV2()], p=1.),
    "valid": A.Compose([
        A.Resize(CFG.img_size, CFG.img_size),
        A.Normalize(mean=[0.485, 0.456, 0.406],
                    std=[0.229, 0.224, 0.225],
                    max_pixel_value=255.0,
                    p=1.0),
        ToTensorV2()], p=1.)
}
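`A.Normalize` maps raw 0 to 255 pixel values with the per-channel formula (x - mean * max_pixel_value) / (std * max_pixel_value), using the ImageNet statistics above; `ToTensorV2` then reorders the array from HWC to CHW without rescaling. A plain-Python sketch of the arithmetic for a single pixel (the helper `normalize_pixel` is hypothetical):

```python
# ImageNet statistics used in data_transforms above
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)
MAX_PIXEL_VALUE = 255.0


def normalize_pixel(rgb, mean=MEAN, std=STD, max_pixel_value=MAX_PIXEL_VALUE):
    """Apply (x - mean * max) / (std * max) to each RGB channel of one pixel."""
    return tuple((x - m * max_pixel_value) / (s * max_pixel_value)
                 for x, m, s in zip(rgb, mean, std))


print(normalize_pixel((255, 255, 255)))  # brightest pixel, roughly +2.2 to +2.6
print(normalize_pixel((0, 0, 0)))        # darkest pixel, roughly -2.1 to -1.8
```

Because the EfficientNet weights were pretrained on inputs normalized this way, keeping the same statistics for both the train and valid pipelines matters more than the exact values.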

Training Function

Use Automatic Mixed Precision to speed up training and Gradient Accumulation to increase the effective batch size.

Refer to this discussion to learn more about mixed precision training.

Refer to this discussion to learn more about gradient accumulation.
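Dividing each micro-batch loss by `n_accumulate` before `backward()` makes the summed gradient equal to the gradient of one large batch. A toy check with a hypothetical one-parameter model and mean-squared error, in plain Python rather than PyTorch:

```python
def grad_mse(w, xs, ys):
    """Gradient d/dw of mean((w * x - y)**2) over the given samples."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


# Toy 1-D regression data (hypothetical values, 8 samples)
xs = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
ys = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2, 13.8, 16.1]
w = 0.5
n_accumulate = 4
micro = len(xs) // n_accumulate  # micro-batch size: 2 samples

# Gradient accumulation: scale each micro-batch gradient by 1 / n_accumulate
# and add the results up before taking one optimizer step.
accumulated = 0.0
for k in range(n_accumulate):
    batch = slice(k * micro, (k + 1) * micro)
    accumulated += grad_mse(w, xs[batch], ys[batch]) / n_accumulate

# Gradient of the same loss computed on all 8 samples at once
full_batch = grad_mse(w, xs, ys)
print(accumulated, full_batch)
```

The equality relies on equal-sized micro-batches and a loss that is a plain mean over independent samples. Neither holds fully here: BatchNorm statistics are still computed per micro-batch of 16, and SupCon compares each anchor only with the other images in its own micro-batch, so accumulation averages gradients over 64 images without giving the loss 64 candidates for positives and negatives.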

def train_model(model, criterion, optimizer, scheduler, num_epochs, dataloaders, dataset_sizes, device, fold):
    start = time.time()
    best_model_wts = copy.deepcopy(model.state_dict())
    best_loss = np.inf
    history = defaultdict(list)
    scaler = amp.GradScaler()

    for epoch in range(1, num_epochs + 1):
        print(f"Epoch {epoch}/{num_epochs}")
        print("-" * 10)

        # Each epoch has a training and validation phase
        for phase in ['train', 'valid']:
            if phase == 'train':
                model.train()  # Set model to training mode
            else:
                model.eval()   # Set model to evaluation mode

            running_loss = 0.0
            optimizer.zero_grad()

            # Iterate over data; `step` counts batches so accumulation works within an epoch
            for step, (inputs, labels) in enumerate(tqdm(dataloaders[phase])):
                inputs = inputs.to(device)
                labels = labels.to(device)

                # forward
                # track history only in train
                with torch.set_grad_enabled(phase == 'train'):
                    with amp.autocast(enabled=True):
                        outputs = model(inputs)
                        loss = criterion(outputs, labels)

                    # backward only in the training phase; dividing by n_accumulate
                    # makes the accumulated gradient an average over the micro-batches
                    if phase == 'train':
                        scaler.scale(loss / CFG.n_accumulate).backward()

                # optimize only in the training phase, once every n_accumulate batches
                if phase == 'train' and (step + 1) % CFG.n_accumulate == 0:
                    scaler.step(optimizer)
                    scaler.update()
                    # zero the parameter gradients
                    optimizer.zero_grad()

                # log the unscaled loss so train and valid losses are comparable
                running_loss += loss.item() * inputs.size(0)

            if phase == 'train':
                # step once per epoch: T_max = 10 matches num_epochs = 10
                scheduler.step()

            epoch_loss = running_loss / dataset_sizes[phase]
            history[phase + ' loss'].append(epoch_loss)
            print(f"{phase} Loss: {epoch_loss:.4f}")

            # deep copy the model
            if phase == 'valid' and epoch_loss <= best_loss:
                best_loss = epoch_loss
                best_model_wts = copy.deepcopy(model.state_dict())
                PATH = f"Fold{fold}_{best_loss:.4f}_epoch_{epoch}.bin"
                torch.save(model.state_dict(), PATH)

        print()

    end = time.time()
    time_elapsed = end - start
    print(f"Training complete in {time_elapsed // 3600:.0f}h {(time_elapsed % 3600) // 60:.0f}m {(time_elapsed % 3600) % 60:.0f}s")
    print("Best Loss ", best_loss)

    # load best model weights
    model.load_state_dict(best_model_wts)
    return model, history
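`amp.GradScaler` is what makes float16 training safe in `train_model`: the loss is multiplied by a large scale factor before `backward()` so small gradients do not underflow, `scaler.step` skips the optimizer update if the unscaled gradients contain inf or NaN, and `scaler.update` shrinks the scale after such an overflow and grows it again after a run of clean steps. The class below is a hypothetical, simplified model of that update rule, not the real implementation; its defaults mirror GradScaler's documented `init_scale=2**16`, `growth_factor=2.0`, `backoff_factor=0.5` and `growth_interval=2000`.

```python
class ToyLossScaler:
    """Hypothetical, simplified model of dynamic loss scaling (not torch's GradScaler)."""

    def __init__(self, init_scale=2.0**16, growth_factor=2.0,
                 backoff_factor=0.5, growth_interval=2000):
        self.scale = init_scale
        self.growth_factor = growth_factor
        self.backoff_factor = backoff_factor
        self.growth_interval = growth_interval
        self._good_steps = 0  # consecutive steps without inf/NaN gradients

    def update(self, found_inf):
        """Return True if the optimizer step would be applied."""
        if found_inf:
            # overflow: skip this step and back off the scale
            self.scale *= self.backoff_factor
            self._good_steps = 0
            return False
        self._good_steps += 1
        if self._good_steps == self.growth_interval:
            # a long enough clean run: try a larger scale
            self.scale *= self.growth_factor
            self._good_steps = 0
        return True


scaler = ToyLossScaler(growth_interval=3)  # short interval just for the demo
applied = [scaler.update(found_inf) for found_inf in [False, True, False, False, False]]
print(applied, scaler.scale)  # [True, False, True, True, True] 65536.0
```

A skipped step also means one fewer effective update, so occasional "skipped" steps early in training are expected while the scale settles.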

def run_fold(model, criterion, optimizer, scheduler, device, fold, num_epochs=10):
    # Requires a `fold` column in df_train (one fold id per row)
    valid_df = df_train[df_train.fold == fold]
    train_df = df_train[df_train.fold != fold]

    train_data = ShopeeDataset(TRAIN_DIR, train_df, transforms=data_transforms['train'])
    valid_data = ShopeeDataset(TRAIN_DIR, valid_df, transforms=data_transforms['valid'])

    dataset_sizes = {
        'train': len(train_data),
        'valid': len(valid_data)
    }

    train_loader = DataLoader(dataset=train_data,
                              batch_size=CFG.batch_size,
                              num_workers=4,
                              pin_memory=True, shuffle=True)
    # fixed order so the validation loss is comparable across epochs
    valid_loader = DataLoader(dataset=valid_data,
                              batch_size=CFG.batch_size,
                              num_workers=4,
                              pin_memory=True, shuffle=False)

    dataloaders = {
        "train": train_loader,
        "valid": valid_loader
    }

    model, history = train_model(model, criterion, optimizer, scheduler, num_epochs,
                                 dataloaders, dataset_sizes, device, fold)
    return model, history
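`run_fold` filters `df_train` on a `fold` column, but nothing in this section creates it. The notebook imports `StratifiedKFold` and `GroupKFold`, so the author likely used one of them; the sketch below is a dependency-free stand-in that spreads the rows of every `label_group` round-robin across `CFG.n_fold` folds. The helper name `assign_folds` is hypothetical.

```python
import random
from collections import defaultdict


def assign_folds(labels, n_folds, seed=42):
    """Hypothetical helper: return one fold id per row, spreading each class
    round-robin across folds so every fold sees a similar label mix."""
    rng = random.Random(seed)
    rows_by_label = defaultdict(list)
    for row, label in enumerate(labels):
        rows_by_label[label].append(row)

    folds = [0] * len(labels)
    offset = 0
    for label in sorted(rows_by_label):
        rows = rows_by_label[label]
        rng.shuffle(rows)  # which member of the class lands in which fold
        for j, row in enumerate(rows):
            folds[row] = (offset + j) % n_folds
        # continue the rotation so small classes do not all start at fold 0
        offset += len(rows)
    return folds


folds = assign_folds([0, 0, 1, 1, 1, 2, 2, 2, 2, 2], n_folds=5)
print(folds)
# Hypothetical usage before run_fold:
# df_train["fold"] = assign_folds(df_train["label_group"].tolist(), CFG.n_fold)
```

With scikit-learn, `StratifiedKFold(n_splits=CFG.n_fold, shuffle=True, random_state=CFG.seed).split(df_train, df_train["label_group"])` yields `(train_idx, valid_idx)` pairs that could fill the same column, likely with a warning if some groups have fewer members than `n_fold`. Either way, a two-item group split across folds leaves a single member in each, which gives SupCon no positive pair for it within that fold.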

Load Model

model = timm.create_model(CFG.model_name, pretrained = True)

#> /home/rstudio/.local/share/r-miniconda/envs/r-reticulate/lib/python3.10/site-packages/timm/models/_factory.py:117: UserWarning: Mapping deprecated model name tf_efficientnet_b4_ns to current tf_efficientnet_b4.ns_jft_in1k.

#> model = create_fn(

in_features = model.classifier.in_features

model.classifier = nn.Linear(in_features, CFG.embedding_size)

out = model(torch.randn(1, 3, CFG.img_size, CFG.img_size))

print(f"Embedding shape : {out.shape}")

#> Embedding shape : torch.Size([1, 512])

model.to(CFG.device)

#> EfficientNet(

#> (conv_stem): Conv2dSame(3, 48, kernel_size=(3, 3), stride=(2, 2), bias=False)

#> (bn1): BatchNormAct2d(

#> 48, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (blocks): Sequential(

#> (0): Sequential(

#> (0): DepthwiseSeparableConv(

#> (conv_dw): Conv2d(48, 48, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=48, bias=False)

#> (bn1): BatchNormAct2d(

#> 48, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(48, 12, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(12, 48, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pw): Conv2d(48, 24, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn2): BatchNormAct2d(

#> 24, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (1): DepthwiseSeparableConv(

#> (conv_dw): Conv2d(24, 24, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=24, bias=False)

#> (bn1): BatchNormAct2d(

#> 24, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(24, 6, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(6, 24, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pw): Conv2d(24, 24, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn2): BatchNormAct2d(

#> 24, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> )

#> (1): Sequential(

#> (0): InvertedResidual(

#> (conv_pw): Conv2d(24, 144, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 144, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2dSame(144, 144, kernel_size=(3, 3), stride=(2, 2), groups=144, bias=False)

#> (bn2): BatchNormAct2d(

#> 144, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(144, 6, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(6, 144, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(144, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 32, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (1): InvertedResidual(

#> (conv_pw): Conv2d(32, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(192, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=192, bias=False)

#> (bn2): BatchNormAct2d(

#> 192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(192, 8, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(8, 192, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(192, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 32, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (2): InvertedResidual(

#> (conv_pw): Conv2d(32, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(192, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=192, bias=False)

#> (bn2): BatchNormAct2d(

#> 192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(192, 8, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(8, 192, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(192, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 32, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (3): InvertedResidual(

#> (conv_pw): Conv2d(32, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(192, 192, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=192, bias=False)

#> (bn2): BatchNormAct2d(

#> 192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(192, 8, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(8, 192, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(192, 32, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 32, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> )

#> (2): Sequential(

#> (0): InvertedResidual(

#> (conv_pw): Conv2d(32, 192, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2dSame(192, 192, kernel_size=(5, 5), stride=(2, 2), groups=192, bias=False)

#> (bn2): BatchNormAct2d(

#> 192, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(192, 8, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(8, 192, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(192, 56, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 56, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (1): InvertedResidual(

#> (conv_pw): Conv2d(56, 336, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 336, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(336, 336, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=336, bias=False)

#> (bn2): BatchNormAct2d(

#> 336, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(336, 14, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(14, 336, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(336, 56, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 56, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (2): InvertedResidual(

#> (conv_pw): Conv2d(56, 336, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 336, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(336, 336, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=336, bias=False)

#> (bn2): BatchNormAct2d(

#> 336, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(336, 14, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(14, 336, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(336, 56, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 56, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (3): InvertedResidual(

#> (conv_pw): Conv2d(56, 336, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 336, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(336, 336, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=336, bias=False)

#> (bn2): BatchNormAct2d(

#> 336, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(336, 14, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(14, 336, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(336, 56, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 56, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> )

#> (3): Sequential(

#> (0): InvertedResidual(

#> (conv_pw): Conv2d(56, 336, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 336, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2dSame(336, 336, kernel_size=(3, 3), stride=(2, 2), groups=336, bias=False)

#> (bn2): BatchNormAct2d(

#> 336, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(336, 14, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(14, 336, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(336, 112, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 112, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (1): InvertedResidual(

#> (conv_pw): Conv2d(112, 672, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(672, 672, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=672, bias=False)

#> (bn2): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(672, 28, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(28, 672, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(672, 112, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 112, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (2): InvertedResidual(

#> (conv_pw): Conv2d(112, 672, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(672, 672, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=672, bias=False)

#> (bn2): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(672, 28, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(28, 672, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(672, 112, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 112, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (3): InvertedResidual(

#> (conv_pw): Conv2d(112, 672, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(672, 672, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=672, bias=False)

#> (bn2): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(672, 28, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(28, 672, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(672, 112, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 112, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (4): InvertedResidual(

#> (conv_pw): Conv2d(112, 672, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(672, 672, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=672, bias=False)

#> (bn2): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(672, 28, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(28, 672, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(672, 112, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 112, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (5): InvertedResidual(

#> (conv_pw): Conv2d(112, 672, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(672, 672, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=672, bias=False)

#> (bn2): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(672, 28, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(28, 672, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(672, 112, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 112, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> )

#> (4): Sequential(

#> (0): InvertedResidual(

#> (conv_pw): Conv2d(112, 672, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(672, 672, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=672, bias=False)

#> (bn2): BatchNormAct2d(

#> 672, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(672, 28, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(28, 672, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(672, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (1): InvertedResidual(

#> (conv_pw): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(960, 960, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=960, bias=False)

#> (bn2): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(960, 40, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(40, 960, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(960, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (2): InvertedResidual(

#> (conv_pw): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(960, 960, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=960, bias=False)

#> (bn2): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(960, 40, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(40, 960, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(960, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (3): InvertedResidual(

#> (conv_pw): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(960, 960, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=960, bias=False)

#> (bn2): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(960, 40, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(40, 960, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(960, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (4): InvertedResidual(

#> (conv_pw): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(960, 960, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=960, bias=False)

#> (bn2): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(960, 40, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(40, 960, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(960, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (5): InvertedResidual(

#> (conv_pw): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(960, 960, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=960, bias=False)

#> (bn2): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(960, 40, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(40, 960, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(960, 160, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 160, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> )

#> (5): Sequential(

#> (0): InvertedResidual(

#> (conv_pw): Conv2d(160, 960, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2dSame(960, 960, kernel_size=(5, 5), stride=(2, 2), groups=960, bias=False)

#> (bn2): BatchNormAct2d(

#> 960, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(960, 40, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(40, 960, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(960, 272, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 272, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (1): InvertedResidual(

#> (conv_pw): Conv2d(272, 1632, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(1632, 1632, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=1632, bias=False)

#> (bn2): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(1632, 68, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(68, 1632, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(1632, 272, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 272, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (2): InvertedResidual(

#> (conv_pw): Conv2d(272, 1632, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(1632, 1632, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=1632, bias=False)

#> (bn2): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(1632, 68, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(68, 1632, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(1632, 272, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 272, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (3): InvertedResidual(

#> (conv_pw): Conv2d(272, 1632, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(1632, 1632, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=1632, bias=False)

#> (bn2): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(1632, 68, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(68, 1632, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(1632, 272, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 272, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (4): InvertedResidual(

#> (conv_pw): Conv2d(272, 1632, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(1632, 1632, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=1632, bias=False)

#> (bn2): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(1632, 68, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(68, 1632, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(1632, 272, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 272, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (5): InvertedResidual(

#> (conv_pw): Conv2d(272, 1632, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(1632, 1632, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=1632, bias=False)

#> (bn2): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(1632, 68, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(68, 1632, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(1632, 272, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 272, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (6): InvertedResidual(

#> (conv_pw): Conv2d(272, 1632, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(1632, 1632, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=1632, bias=False)

#> (bn2): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(1632, 68, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(68, 1632, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(1632, 272, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 272, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (7): InvertedResidual(

#> (conv_pw): Conv2d(272, 1632, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(1632, 1632, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2), groups=1632, bias=False)

#> (bn2): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(1632, 68, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(68, 1632, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(1632, 272, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 272, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> )

#> (6): Sequential(

#> (0): InvertedResidual(

#> (conv_pw): Conv2d(272, 1632, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(1632, 1632, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=1632, bias=False)

#> (bn2): BatchNormAct2d(

#> 1632, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(1632, 68, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(68, 1632, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(1632, 448, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 448, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> (1): InvertedResidual(

#> (conv_pw): Conv2d(448, 2688, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn1): BatchNormAct2d(

#> 2688, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (conv_dw): Conv2d(2688, 2688, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1), groups=2688, bias=False)

#> (bn2): BatchNormAct2d(

#> 2688, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (aa): Identity()

#> (se): SqueezeExcite(

#> (conv_reduce): Conv2d(2688, 112, kernel_size=(1, 1), stride=(1, 1))

#> (act1): SiLU(inplace=True)

#> (conv_expand): Conv2d(112, 2688, kernel_size=(1, 1), stride=(1, 1))

#> (gate): Sigmoid()

#> )

#> (conv_pwl): Conv2d(2688, 448, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn3): BatchNormAct2d(

#> 448, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): Identity()

#> )

#> (drop_path): Identity()

#> )

#> )

#> )

#> (conv_head): Conv2d(448, 1792, kernel_size=(1, 1), stride=(1, 1), bias=False)

#> (bn2): BatchNormAct2d(

#> 1792, eps=0.001, momentum=0.1, affine=True, track_running_stats=True

#> (drop): Identity()

#> (act): SiLU(inplace=True)

#> )

#> (global_pool): SelectAdaptivePool2d(pool_type=avg, flatten=Flatten(start_dim=1, end_dim=-1))

#> (classifier): Linear(in_features=1792, out_features=512, bias=True)

#> )
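The printout is easier to read once the width pattern is clear. In every InvertedResidual block shown, `conv_pw` expands the input channels by 6 (160→960, 272→1632, 448→2688). The SqueezeExcite `conv_reduce` goes down to a quarter of the block's *input* width (40, 68, 112). The only stride-2 layer in this part of the output is a `Conv2dSame`, which pads on the fly to mimic TensorFlow's "SAME" padding. The widths (448→1792 head) look consistent with an EfficientNet-B4-style backbone, but the exact model name is not visible in this part of the output.

The final `classifier` maps the 1792 pooled features to 512 outputs. That suggests the head was swapped for a 512-dimensional embedding layer, but this is an inference from the printout, not something the output states.

The sketch below recomputes these numbers in plain Python. The ratios 6 and 0.25 are read off the printed layers rather than taken from a timm config. The function names are hypothetical helpers, not part of timm.

```python
import math

# Assumption: ratios inferred from the printed layers, not read from timm's config.
EXPAND_RATIO = 6   # conv_pw: in_chs -> 6 * in_chs
SE_RATIO = 0.25    # SE conv_reduce width relative to the block's *input* channels


def inverted_residual_channels(in_chs, out_chs, expand_ratio=EXPAND_RATIO, se_ratio=SE_RATIO):
    """Hypothetical helper: (in, out) channels of each conv in one InvertedResidual block."""
    mid_chs = in_chs * expand_ratio          # pointwise expansion
    se_chs = int(in_chs * se_ratio)          # squeeze width
    return {
        "conv_pw": (in_chs, mid_chs),
        "conv_dw": (mid_chs, mid_chs),       # depthwise conv: groups == mid_chs
        "se.conv_reduce": (mid_chs, se_chs),
        "se.conv_expand": (se_chs, mid_chs),
        "conv_pwl": (mid_chs, out_chs),      # linear projection, no activation
    }


def same_padding(in_size, kernel, stride, dilation=1):
    """Hypothetical helper: TF-style 'SAME' padding along one spatial axis.

    Returns the output size (ceil(in / stride)) and the (before, after) padding;
    an odd total puts the extra pixel after (bottom/right), as TensorFlow does.
    """
    out_size = math.ceil(in_size / stride)
    total = max((out_size - 1) * stride + (kernel - 1) * dilation + 1 - in_size, 0)
    return out_size, (total // 2, total - total // 2)


def linear_params(in_features, out_features, bias=True):
    """Parameter count of an nn.Linear layer: weight matrix plus optional bias."""
    return in_features * out_features + (out_features if bias else 0)


if __name__ == "__main__":
    # Stage-5 block (0): 160 -> 272 channels, matching the printed convs.
    print(inverted_residual_channels(160, 272)["se.conv_reduce"])  # (960, 40)
    # 5x5 stride-2 Conv2dSame on a hypothetical 24-pixel-wide feature map.
    print(same_padding(24, kernel=5, stride=2))                    # (12, (1, 2))
    # Final classifier: Linear(1792 -> 512).
    print(linear_params(1792, 512))                                # 918016
```

The 24-pixel input in the usage example is only illustrative. The real feature-map size depends on the image size used in the notebook. The asymmetric `(1, 2)` split is why timm uses `Conv2dSame` instead of a fixed `padding=(2, 2)` for TF-ported weights: with a stride of 2, symmetric padding would shift the sampling grid relative to the original TensorFlow model.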